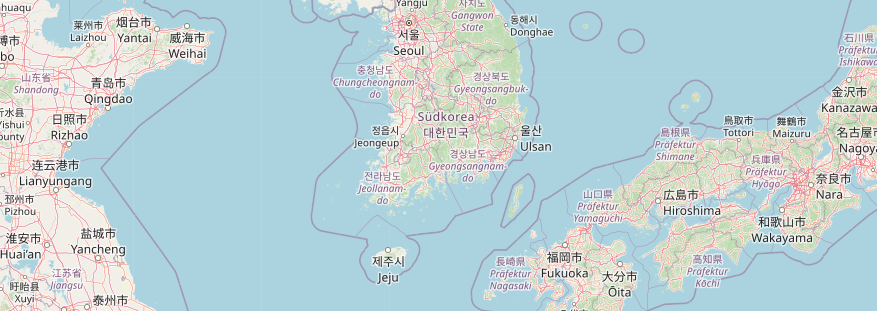

Following this tweet about a request of localized maps on osm.org I would like to share some thoughts on this topic.

My first versions of the localization code used in German style dates back to 2012. Back then I had the exact same problem as Laurence using OSM based maps in regions of the world where Latin script is not the norm and thus I started developing the localization code for German style.

Fortunately I was able to improve this code in December 2015 as part of a research project during my day job.

I also gave some talks about it in 2016 at FOSSGIS and FOSS4G conferences.

Recordings and slides of these talks are available at the l10n wiki.

Map localization seems to be mostly unprecedented in traditional GIS applications as before Openstreetmap there was no such thing as a global dataset of geographical data.

Contrary to my initial thought doing localization “good enough” is not an easy task and I learned a lot of stuff about writing systems that in fact I not even wanted to know.

What I intend to share here is basically the dos and don’ts of map localization.

Currently my code is implemented mostly as PostgreSQL shared procedures, which was a good idea back in 2012 when rendering almost always involved PostgreSQL/PostGIS at some stage anyway. This will likely change in a vector tile only tool chain used in future. To take this into account in the meantime I also have a proof of concept implementation written in python.

So what is the current state of affairs?

Basically there are two functions which will output either a localized street name or place name using an associative array of tags and a geometry object as input. In the output street names are separated by “-” while place names are usually two-line strings. Additionally street names are abbreviated whenever possible (if I know how to do this in a particular language). Feel free to send patches if you language does not contain abbreviations yet!

Initialy I used to put the localized name in parenthesis, but this is not a very good idea for various reasons. First of all which one would be the correct name to put in parenthesis? And even more important, what would one do in the case of scripts like arabic or hebrew? So I finaly got rid of the parenthesis altogether.

What else does the code in which way and whats the rationale behind it?

There are various regions of the world with more than one official language. In those regions the generic name tag will usually contain both names which will just make sense if only this tag is rendered like osm carto does.

So what to do in those cases?

Well if the desired target language name is part of the generic name tag just use this one and avoid duplicates at any cost! As an example lets take Bolzano/Bozen in the autonomous province South Tyrol. Official languages there are Italian and German thus the generic name tag will be “Bolzano – Bozen”. Doing some search magic in various name tags we will end up using “Bolzano\nBozen” in German localization and using “Bolzano – Bozen” unaltered in English localization because there is no name:en tag.

But what to do if name contains non latin scripts?

The main rationale behind my whole code is that the mapper is always right and that automatic transcription should be only used as a last resort.

This said please do not tag transcriptions as localized names in any case because they will be redundant at best and plain wrong at worst. This is a job that computers should be able to do better. Also do never map automated transcriptions.

Transcriptions might be mapped in cases when they are printed on an official place-name sign. Please use the appropriate tag like name:jp_rm or name:ko-Latn in this case and not something like name:en or name:de.

(Image ©Heinrich Damm Wikimedia Commons CC BY-SA 3.0)

Correct tagging (IMO) should be:

name=ถนนเยาวราช

name:th=ถนนเยาวราช

name:th-Latn=thanon yaoverat

name:en=CHINA TOWN

So a few final words to transcription and the code currently in use. Please keep in mind that transcription is always done as a last resort only in case when there are no suitable name-tags on the object.

Some of the readers may already know the difference between transcription and transliteration. Nevertheless some may not so I will explain it. While transliteration is fully reversible transcription might not always be. So in case of rendered maps transcription is likely what we want to have because we do not care about a reversible algorithm in this case.

First I started with a rather naive approach. I just used the Any-Latin transliteration code from libicu. Unfortunately this was not a very good idea in a couple of cases thus I went for a little bit more sophisticated approach.

So here is how the current code performs transcription:

- Call a function to get the country where the object is located at

(This function is actually based on a database table from nominatim)

- If the country in question is one with a country specific transcription algorithm go for this one and use libicu otherwise.

Currently in Japan kakasi is used instead of libicu in order to avoid chinese transcriptions and in Thailand some python code is used because libicu uses a rarely used ISO standard transliteration instead of the more common Royal Thai General System of Transcription (RTGS).

There are still a couple of other issues. The most notable one is likely the fact, that transcription of arabic is far from perfect as vowels are usually not part of names in this case. Furthermore transcription based on pronunciation is difficult as arabic script is used for very different languages.

So where to go from here?

Having localized rendering on osm.org for every requested language is unrealistic using the current technology as any additional language will double the effort of map rendering. Although my current code might even produce some strange results when non-latin output languages are selected.

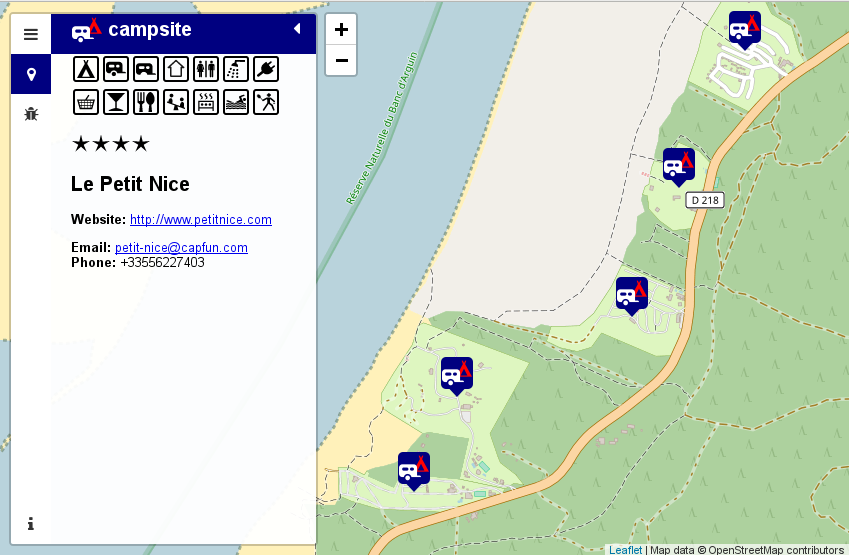

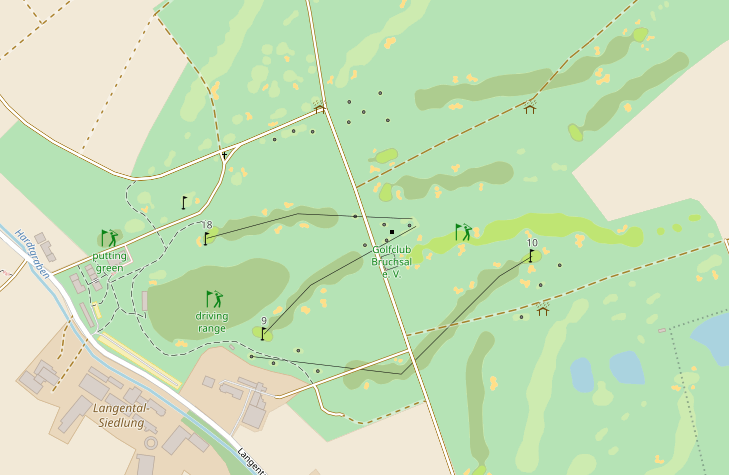

This said it would be very easy to setup a tile-server with localized rendering in any target language using Latin script. For this purpose you might not even need to use the German Mapnik style as I even maintain a localized version of vanilla OSM Carto style.

Actually I have a Tileserver running this code with English localization at my workplace.

So as for a map with English localization http://www.openstreetmap.us/ or

http://www.openstreetmap.co.uk would be the right place to host such a map.

So why not implementing this on osm.org? I suppose that this should be done as part of the transition to vector tiles whenever this will happen. As the back-end technology of the vector-tiles server is not yet known I can not tell how suitable my code would be for this case. Likely it might need to be rewritten in C++ for this purpose. As I already wrote, I have a proof of concept implementation written in python which can be used to localize osm/pbf files.

I took the chance to go for

I took the chance to go for

Neueste Kommentare